A fast geo database with Google S2 take #2

- Golang

Six months ago, I wrote on this blog about Geohashes and LevelDB with Go, to create a fast geo database.

This post is very similar: it works the same way, but replaces Geohashes with the Google S2 library for better performance.

There is an S2 Golang implementation maintained by Google; it is not as complete as the C++ one, but close.

For the storage, this post stays agnostic to avoid any trolling, but the approach applies to any key-value store: LevelDB/RocksDB, LMDB, Redis…

I personally use BoltDB and gtreap for my experiments.

This post focuses on Go usage, but it can be applied to any language.

Or skip to the images below for visual explanations.

Why not Geohash?

Geohash is a great solution for querying geo coordinates, but the way it works can sometimes be an issue with your data.

- Remember that geohashes are cells of 12 different sizes, from 5000 km down to 3.7 cm. When you perform a lookup around a position that is close to a cell’s edge, you could miss points from the adjacent cell. That is why you have to query the 8 neighbour cells as well, which means 9 range queries into your DB to find all the points closest to your location.

- If your lookup does not fit in a level 4 cell (39 km by 19.5 km), the next level up is 156 km by 156 km!

- The query is not performed around your coordinates: you search for the cell you are in, then query the adjacent cells at the same level/radius. Depending on your needs, this can be very approximate, and you can only perform ‘circle’ lookups around the cell you are in.

- The most precise geohash needs 12 bytes of storage.

- Cells on either side of -90/+90, +180/-180 and -0/+0 do not have side-by-side prefixes.
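To make the last point concrete, here is a minimal sketch of the standard geohash encoding (longitude and latitude bits interleaved, then base32), written from scratch for illustration rather than taken from a library. The names `geohash` and `commonPrefixLen` are made up for the example. It encodes two points a few dozen metres apart, on either side of the equator and the prime meridian, and counts how many leading characters their hashes share.

```go
package main

import "fmt"

// base32 is the geohash alphabet (no a, i, l, o).
const base32 = "0123456789bcdefghjkmnpqrstuvwxyz"

// geohash encodes lat/lng into a geohash string of the given length.
func geohash(lat, lng float64, length int) string {
	latMin, latMax := -90.0, 90.0
	lngMin, lngMax := -180.0, 180.0
	out := make([]byte, 0, length)
	bits, ch := 0, 0
	lngTurn := true // the first bit of a geohash is a longitude bit
	for len(out) < length {
		if lngTurn {
			mid := (lngMin + lngMax) / 2
			if lng >= mid {
				ch = ch<<1 | 1
				lngMin = mid
			} else {
				ch <<= 1
				lngMax = mid
			}
		} else {
			mid := (latMin + latMax) / 2
			if lat >= mid {
				ch = ch<<1 | 1
				latMin = mid
			} else {
				ch <<= 1
				latMax = mid
			}
		}
		lngTurn = !lngTurn
		bits++
		if bits == 5 { // every 5 bits become one base32 character
			out = append(out, base32[ch])
			bits, ch = 0, 0
		}
	}
	return string(out)
}

// commonPrefixLen returns how many leading characters a and b share.
func commonPrefixLen(a, b string) int {
	n := 0
	for n < len(a) && n < len(b) && a[n] == b[n] {
		n++
	}
	return n
}

func main() {
	ne := geohash(0.0001, 0.0001, 8)   // just north-east of 0,0
	sw := geohash(-0.0001, -0.0001, 8) // just south-west of 0,0
	fmt.Println(ne, sw, "shared prefix length:", commonPrefixLen(ne, sw))
}
```

With no shared prefix, a single prefix (range) scan can never return both points, however close they are.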

Why S2?

S2 cells have a level ranging from 30 (~0.7 cm²) down to 0 (~85,000,000 km²).
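Those two figures can be sanity-checked with a little arithmetic: the sphere is split into 6 face cells at level 0, and each further level divides a cell into 4. The sketch below computes the average cell area per level under that assumption, with an assumed mean Earth radius of 6371 km (not an S2 constant); real S2 cells vary somewhat in area around this average.

```go
package main

import (
	"fmt"
	"math"
)

const earthRadiusKm = 6371.0 // assumed mean Earth radius

// avgCellAreaKm2 returns the average area of a cell at the given level:
// the sphere's surface divided by 6 faces, then by 4 for each level.
func avgCellAreaKm2(level int) float64 {
	surface := 4 * math.Pi * earthRadiusKm * earthRadiusKm
	return surface / (6 * math.Pow(4, float64(level)))
}

func main() {
	for _, level := range []int{0, 10, 20, 30} {
		fmt.Printf("level %2d: %.3g km²\n", level, avgCellAreaKm2(level))
	}
	// 1 km² = 1e10 cm²
	fmt.Printf("level 30: %.2f cm²\n", avgCellAreaKm2(30)*1e10)
}
```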

S2 cells are encoded on a uint64, which is easy to store.

The main advantage is the region coverer algorithm: give it a region and the maximum number of cells you want, and S2 will return cells at different levels that cover the region you asked for. Remember that each cell corresponds to one range lookup you’ll have to perform in your database.

The coverage is more accurate, which means fewer reads from the DB, fewer objects to unmarshal…

Real world study

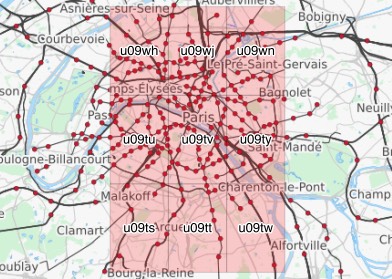

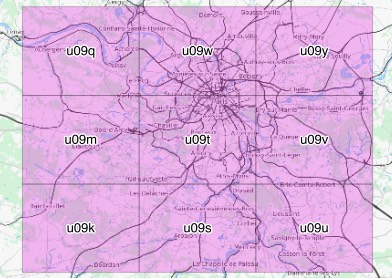

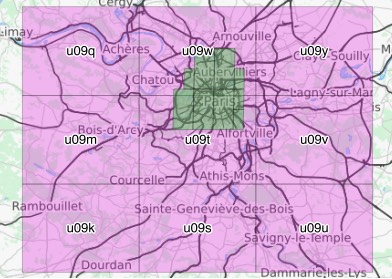

We want to query for objects inside Paris city limits using a rectangle:

Using Geohash level 5 we can’t fit the left part of the city.

We could add 3 cells on the left (12 DB queries in total), but most algorithms will zoom out to level 4.

But now we are querying for the whole region.
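The jump in size between geohash levels is easy to compute: a geohash of length n carries 5n bits, alternating between longitude and latitude and starting with longitude. Below is a rough sketch, assuming about 111.32 km per degree at the equator (cells get narrower as you move towards the poles); `geohashCellKm` is a name made up for this example.

```go
package main

import (
	"fmt"
	"math"
)

const kmPerDegree = 111.32 // assumed length of one degree at the equator

// geohashCellKm returns the approximate width and height, in km at the
// equator, of a geohash cell with the given number of characters.
func geohashCellKm(length int) (w, h float64) {
	bits := 5 * length
	lngBits := (bits + 1) / 2 // longitude gets the extra bit when bits is odd
	latBits := bits / 2
	w = 360 / math.Pow(2, float64(lngBits)) * kmPerDegree
	h = 180 / math.Pow(2, float64(latBits)) * kmPerDegree
	return w, h
}

func main() {
	for length := 3; length <= 5; length++ {
		w, h := geohashCellKm(length)
		fmt.Printf("level %d: %.1f km x %.1f km\n", length, w, h)
	}
}
```

Going from level 5 to level 4 multiplies the cell area by 32, which is why zooming out by one level ends up querying a whole region.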

Using S2, asking for 9 cells with a rectangle around the city limits.

The zones queried by Geohash in pink and S2 in green.

Example S2 storage

Let’s say we want to store every city in the world and perform lookups to find the closest cities around a point. First we need to compute the CellID for each city.

    // Compute the CellID for lat, lng
    c := s2.CellIDFromLatLng(s2.LatLngFromDegrees(lat, lng))

    // Store the uint64 value of c in its big-endian binary form
    key := make([]byte, 8)
    binary.BigEndian.PutUint64(key, uint64(c))

Big endian is needed so that the keys sort lexicographically in the same order as the cell IDs themselves, so we can later seek from one cell to the next closest cell on the Hilbert curve.

c is a CellID at level 30.

Now we can store key as the key and a value (a string or msgpack/protobuf) for our city, in the database.
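Since the storage is left open here, below is a hypothetical in-memory stand-in for an ordered key-value store, just to show the two operations we rely on: inserting under a big-endian key and scanning a key range. `memStore`, `Put` and `Scan` are illustrative names, not the API of BoltDB or any other database; a real store would hand you a cursor instead.

```go
package main

import (
	"bytes"
	"encoding/binary"
	"fmt"
	"sort"
)

type entry struct {
	key   []byte
	value string
}

// memStore keeps its entries sorted by key, like an ordered KV store would.
type memStore struct {
	entries []entry
}

// encodeKey turns a cell ID into an 8-byte big-endian key.
func encodeKey(id uint64) []byte {
	k := make([]byte, 8)
	binary.BigEndian.PutUint64(k, id)
	return k
}

// seek returns the index of the first entry whose key is >= k.
func (s *memStore) seek(k []byte) int {
	return sort.Search(len(s.entries), func(i int) bool {
		return bytes.Compare(s.entries[i].key, k) >= 0
	})
}

// Put inserts value under id, overwriting any existing entry.
func (s *memStore) Put(id uint64, value string) {
	k := encodeKey(id)
	i := s.seek(k)
	if i < len(s.entries) && bytes.Equal(s.entries[i].key, k) {
		s.entries[i].value = value
		return
	}
	s.entries = append(s.entries, entry{})
	copy(s.entries[i+1:], s.entries[i:]) // shift the tail to make room
	s.entries[i] = entry{key: k, value: value}
}

// Scan returns the values whose key lies in [from, to], in key order.
func (s *memStore) Scan(from, to uint64) []string {
	last := encodeKey(to)
	var out []string
	for i := s.seek(encodeKey(from)); i < len(s.entries) && bytes.Compare(s.entries[i].key, last) <= 0; i++ {
		out = append(out, s.entries[i].value)
	}
	return out
}

func main() {
	var s memStore
	// Small integers stand in for real cell IDs here.
	s.Put(40, "d")
	s.Put(10, "a")
	s.Put(30, "c")
	s.Put(20, "b")
	fmt.Println(s.Scan(15, 35))
}
```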

Example S2 lookup

For the lookup we use the opposite procedure, first looking for one CellID.

    // Needs: bytes, encoding/binary, fmt and github.com/golang/geo/s2.

    // citiesInCellID looks for cities inside c
    func citiesInCellID(c s2.CellID) {
        // compute min & max limits for c
        bmin := make([]byte, 8)
        bmax := make([]byte, 8)
        binary.BigEndian.PutUint64(bmin, uint64(c.RangeMin()))
        binary.BigEndian.PutUint64(bmax, uint64(c.RangeMax()))

        // perform a range lookup in the DB from bmin key to bmax key, cur is our DB cursor
        for k, v := cur.Seek(bmin); k != nil && bytes.Compare(k, bmax) <= 0; k, v = cur.Next() {
            // decode the big-endian key back into a CellID
            cell := s2.CellID(binary.BigEndian.Uint64(k))

            // Read back a city
            ll := cell.LatLng()
            lat := ll.Lat.Degrees()
            lng := ll.Lng.Degrees()
            name := string(v)
            fmt.Println(lat, lng, name)
        }
    }
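Why does a single range scan catch every city inside a cell? Assuming the usual S2 layout (3 face bits, then 2 bits per level, then a single 1 bit marking where the ID ends, followed by zeros), all the level 30 descendants of a cell form one contiguous interval of uint64 values. The helpers below reimplement `RangeMin`, `RangeMax` and the parent computation on plain uint64 values with the standard library only; they are illustrative stand-ins for the library methods, and the sample leaf ID is an arbitrary value picked for the demo.

```go
package main

import (
	"fmt"
	"math/bits"
)

const maxLevel = 30

// lsb returns the lowest set bit of a cell ID, which encodes its level.
func lsb(id uint64) uint64 { return id & -id }

// level derives the cell level from the position of the lowest set bit.
func level(id uint64) int { return maxLevel - bits.TrailingZeros64(id)/2 }

// parent returns the ancestor of id at the given (coarser) level.
func parent(id uint64, lvl int) uint64 {
	l := uint64(1) << uint(2*(maxLevel-lvl))
	return (id & -l) | l // keep the high bits, then place the marker bit
}

// rangeMin and rangeMax return the first and last leaf cells inside id.
func rangeMin(id uint64) uint64 { return id - (lsb(id) - 1) }
func rangeMax(id uint64) uint64 { return id + (lsb(id) - 1) }

func main() {
	leaf := uint64(0x47e66e2f5b3c1a2d) // arbitrary level 30 cell ID
	cell := parent(leaf, 10)

	fmt.Printf("leaf  %016x level %d\n", leaf, level(leaf))
	fmt.Printf("cell  %016x level %d\n", cell, level(cell))
	fmt.Printf("range %016x .. %016x\n", rangeMin(cell), rangeMax(cell))
	fmt.Println("leaf in range:", rangeMin(cell) <= leaf && leaf <= rangeMax(cell))
}
```

Because our cities are stored as level 30 IDs, any city located inside the cell has a key between those two bounds.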

Then compute the CellIDs for the region we want to cover.

    rect := s2.RectFromLatLng(s2.LatLngFromDegrees(48.99, 1.852))
    rect = rect.AddPoint(s2.LatLngFromDegrees(48.68, 2.75))

    rc := &s2.RegionCoverer{MaxLevel: 20, MaxCells: 8}
    r := s2.Region(rect.CapBound())
    covering := rc.Covering(r)

    for _, c := range covering {
        citiesInCellID(c)
    }

RegionCoverer will return at most 8 cells (in this case 7 cells: 4 at level 8, 1 at level 7, 1 at level 9 and 1 at level 10) that are guaranteed to cover the given region. Since the covering can extend beyond the region, we may have to exclude cities that are NOT in our rect, using func (Rect) ContainsLatLng(LatLng).
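The final filtering step can be sketched without the library. The `bbox` type below is a simplified stand-in for `s2.Rect` and its `ContainsLatLng` method (it ignores rectangles crossing the antimeridian), and the candidate cities with their approximate coordinates are sample data chosen for the example.

```go
package main

import "fmt"

type city struct {
	name     string
	lat, lng float64
}

// bbox is a simplified stand-in for s2.Rect: a lat/lng box that does not
// cross the antimeridian.
type bbox struct {
	minLat, maxLat, minLng, maxLng float64
}

// contains reports whether the point lies inside the box (edges included).
func (b bbox) contains(lat, lng float64) bool {
	return lat >= b.minLat && lat <= b.maxLat && lng >= b.minLng && lng <= b.maxLng
}

// filter keeps only the candidates that fall inside b.
func filter(b bbox, candidates []city) []city {
	var out []city
	for _, c := range candidates {
		if b.contains(c.lat, c.lng) {
			out = append(out, c)
		}
	}
	return out
}

func main() {
	// Same corners as the rect used for the covering.
	paris := bbox{minLat: 48.68, maxLat: 48.99, minLng: 1.852, maxLng: 2.75}

	// Candidates as they could come back from the range lookups.
	candidates := []city{
		{"Paris", 48.8566, 2.3522},
		{"Versailles", 48.8049, 2.1204},
		{"Chartres", 48.4439, 1.4890}, // too far south and west
		{"Meaux", 48.9601, 2.8787},    // too far east
	}
	for _, c := range filter(paris, candidates) {
		fmt.Println(c.name)
	}
}
```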

Congrats, we have a working geo DB.

S2 can do more with complex shapes like Polygons and includes a lot of tools to compute distances, areas, intersections between shapes…

Here is a GitHub repo with the data & scripts used to generate the images.